Quick answers to the most common questions recruiters ask about detecting and deterring AI-generated applicants.

Fake Candidates, Real Consequences

Navigating AI-Generated Candidates in Modern Recruitment

By 2028, one in four job applicants may be AI-generated. These aren't just exaggerated resumes or mismatched qualifications. We're talking about highly convincing digital personas built to navigate the hiring funnel unnoticed. This forecast is forcing enterprise hiring teams to rethink how they assess authenticity, build trust, and protect their organizations from emerging risks.

AI isn’t new to recruiting. It’s no surprise that modern job seekers are increasingly relying on AI tools to fine-tune resumes or tailor applications—especially in roles where digital fluency is a prerequisite. What’s changed is the complexity of the candidate pool, and how difficult it’s become to distinguish authentic applications from synthetic ones.

Today’s AI-generated candidates exist on a spectrum:

- AI-assisted applicants: Real people using AI tools to improve resumes or cover letters. (Legitimate)

- AI-automated submissions: Applicants who use AI to generate applications in bulk with minimal oversight. (Risky)

- Synthetic identities: Entirely fake candidates, often supported by deepfake videos and fabricated credentials. (Fraudulent)

This piece explores how AI is impacting recruitment, how to spot the differences between fakes and the real thing, and how talent acquisition (TA) leaders can respond by updating their systems, asking better questions of their vendors, and adding safeguards that stop bad actors early, without over-correcting or slowing down real candidates.

How Did AI-Generated Candidates Become a Recruiting Threat?

The rise of AI in recruiting started with simple optimization tools, such as AI note takers, designed to save time and reduce manual effort. Over time, capabilities advanced:

- Keyword tools helped real candidates optimize their resumes to rank higher in applicant tracking systems (ATS), often by mirroring language from job descriptions.

- Text generators began writing convincing resumes and cover letters.

- Image generators produced realistic headshots.

- Deepfake tech now enables real-time video interviews, in which an AI-generated face and voice simulate a human candidate, often responding convincingly to live questions.

The combination of AI-powered application tools, remote hiring, and automated candidate screening has opened the door to a new recruiting risk: coordinated, scalable fraud. In practice, this means individuals or fraud networks can now generate multiple fake identities, each built to appear legitimate and often complete with documents, visuals, and interview-ready responses generated by AI. These synthetic personas are used to flood job boards and application portals, exploiting systems that rely on speed and scale.

Instead of one-off scams, fraudsters are rotating between personas, tweaking resume content and timing to avoid detection. Some use voice modulation to mask accents, while others rely on AI-generated visuals to pass video screens. Without in-person verification or deep funnel scrutiny, it becomes much harder to detect what’s fake.
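One way to surface rotated personas is to compare incoming resumes for near-duplicate text. The sketch below is illustrative only: it assumes resumes are already available as plain text keyed by an application ID, and the function names and the `threshold` value are hypothetical choices, not part of any particular ATS. It splits each resume into overlapping three-word sequences ("shingles") and scores each pair by Jaccard similarity.

```python
import re
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles found in the lowercased text."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(resumes: dict[str, str], threshold: float = 0.6):
    """Yield (id_a, id_b, score) for resume pairs at or above the threshold."""
    sets = {rid: shingles(text) for rid, text in resumes.items()}
    for a, b in combinations(sorted(sets), 2):
        score = jaccard(sets[a], sets[b])
        if score >= threshold:
            yield a, b, round(score, 2)


if __name__ == "__main__":
    sample = {
        "app-1": "Senior Python developer with eight years building data pipelines at scale",
        "app-2": "Senior Python developer with nine years building data pipelines at scale",
        "app-3": "Registered nurse experienced in pediatric intensive care units",
    }
    for pair in near_duplicates(sample, threshold=0.5):
        print(pair)
```

A high score is a prompt for human review, not proof of fraud: many legitimate candidates start from the same templates. The pairwise comparison also grows quadratically with the number of resumes, so a large applicant pool would need an approximate technique such as MinHash.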

Barriers to entry are low. Anyone can input a job description into a public large language model (LLM), generate a synthetic resume and cover letter, and apply to roles without revealing their real identity. As experimentation grows, the boundary between acceptable AI-assisted enhancement and deliberate impersonation continues to blur.

Imagine this: a recruiter receives a polished resume, a strong cover letter, and a clean video interview from a seemingly ideal candidate. The process feels smooth, especially in a fully remote setup where no one meets the candidate in person. But within weeks, the truth begins to surface. Professional certifications can’t be verified. ID numbers don’t match the systems they’re entered into. Unusual login patterns raise flags. What looked like a successful hire turns out to be a synthetic identity. By this point, the risk has already been introduced.

For talent teams operating at scale, particularly in remote or compliance-heavy industries, this isn’t theoretical. It’s already happening—and becoming more difficult to spot with every iteration of the tools involved.

What Risks Do Synthetic Applicants Pose to Operations and Brand?

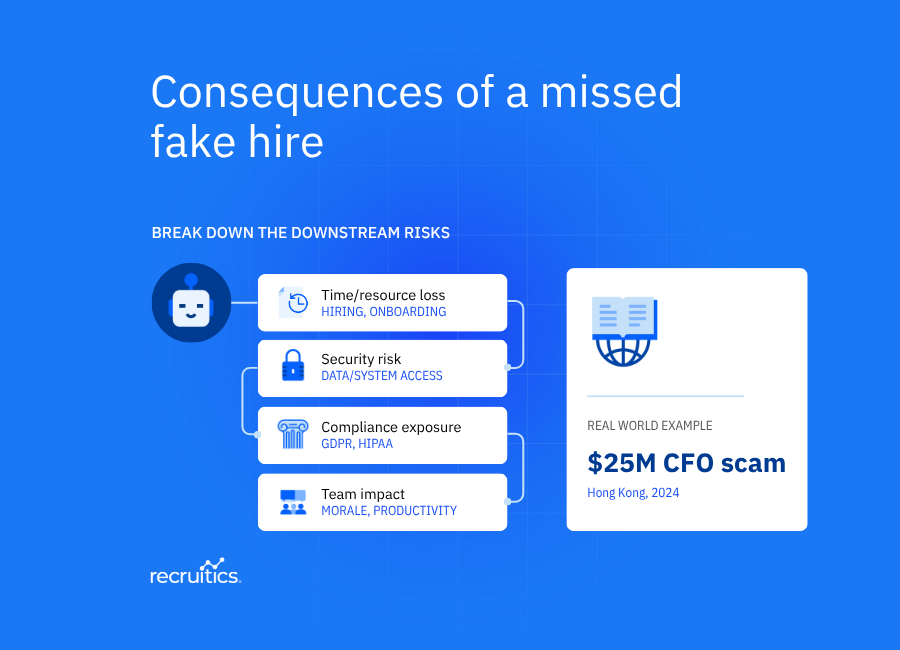

Every misstep in screening AI-generated candidates introduces downstream costs:

- Time and resource loss: Everything from recruiter hours to onboarding expenses is wasted.

- Security risks: If a fake candidate is hired, they may gain access to internal systems, sensitive business data, or customer records, creating serious cybersecurity vulnerabilities.

- Compliance exposure: Regulatory frameworks like GDPR or HIPAA require companies to safeguard personal and sensitive data. Hiring a fake candidate, knowingly or not, can result in violations, fines, or loss of certification if that individual gains unauthorized access.

- Team impact: Morale drops and momentum stalls when projects are derailed by a fake contributor.

The damage doesn’t stop there. Clients expect consistency and control. Hiring a fake candidate undermines both, raising questions about governance, IT security, and brand integrity.

In high-trust sectors like finance, healthcare, and SaaS, these missteps aren’t just costly. They pose systemic threats.

A fraudulent healthcare admin could alter medical records or compromise patient privacy. A developer hired under a false identity, as seen in recent cases involving North Korean operatives, could exfiltrate source code or introduce hidden backdoors. In finance, bad actors who pass initial checks or exploit gaps in verification protocols can impersonate executives or authorize fake transactions, as in the recent deepfake CFO scam that cost a Hong Kong company $25 million.

This is not simply about poor-fit hires. It's about exposure at the organizational level. The cost of undoing these mistakes can far outweigh the time it would have taken to detect them earlier.

How Can Recruiters Identify AI-Generated or Deepfake Applicants?

Spotting fakes is increasingly difficult, but not impossible. Knowing what to look for and layering tools with human oversight can make a meaningful difference.

In applications, look for:

- Resumes that are overly polished or oddly generic

- Work histories that conflict with public timelines

- Cover letters with vague or templated phrasing

Supplement manual review with AI-text detection tools like GPTZero or Originality.AI, but don’t rely on them alone. They are most effective when paired with trained human reviewers who understand industry norms and candidate patterns.
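Some of these application checks can be partly automated. As a minimal sketch, the code below flags work-history entries that cannot be reconciled with reference data, such as a role that starts before the employer was founded. The `Role` structure, the `founded` lookup, and the employer names are hypothetical; in practice the reference data would come from whatever verified source your team trusts.

```python
from dataclasses import dataclass


@dataclass
class Role:
    employer: str
    start_year: int
    end_year: int


def timeline_conflicts(roles: list[Role], founded: dict[str, int]) -> list[str]:
    """Return readable flags for roles that conflict with the reference data."""
    flags = []
    for role in roles:
        # A role cannot end before it begins.
        if role.end_year < role.start_year:
            flags.append(f"{role.employer}: ends before it starts")
        # A role cannot begin before the employer existed (if we know when that was).
        year = founded.get(role.employer)
        if year is not None and role.start_year < year:
            flags.append(
                f"{role.employer}: starts in {role.start_year}, employer founded {year}"
            )
    return flags


if __name__ == "__main__":
    history = [
        Role("Acme Robotics", 2012, 2016),  # hypothetical employer
        Role("Globex", 2019, 2017),         # hypothetical employer
    ]
    for flag in timeline_conflicts(history, founded={"Acme Robotics": 2015}):
        print(flag)
```

A flag here is a reason to ask the candidate a follow-up question, since typos and company renames produce the same pattern as fabrication.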

In interviews, be alert to:

- Lip-sync issues, lag, or unusual lighting: These can be signs of a deepfake video, where a synthetic face is layered over a real person to conceal identity.

- Delays or hesitance when asked for live ID or on-camera tasks: Fraudulent applicants may need time to adjust filters or may avoid spontaneous requests that reveal inconsistencies.

- Responses that are coherent but subtly off-topic: AI-generated responses may sound polished but often lack contextual relevance, especially when asked nuanced or follow-up questions.

Pay attention to communication behaviors, too. A strong preference for email over video, evasiveness in scheduling, or a lack of specificity in follow-ups can signal that something is off. Even the timing and formatting of emails can reveal patterns worth flagging.

Recruiters don’t have to manage this alone. Strong collaboration with IT and security teams brings critical support. These teams are often better equipped to detect red flags like unusual login activity, credential mismatches, or deepfake indicators. Together, they help ensure identity verification becomes part of a broader, organization-wide risk mitigation strategy.

Which Safeguards Stop Synthetic Identities Before the Offer Stage?

Protecting your funnel from AI-generated candidates isn't just about identifying suspicious profiles. It's about evaluating the tools, systems, and processes your team relies on to make hiring decisions, and understanding where those systems may be vulnerable.

TA leaders should assess the tools in their hiring tech stack—especially AI-enabled sourcing, screening, or scoring platforms—by asking:

- Can our AI models explain or justify why a candidate was flagged or prioritized?

- Are we using up-to-date and trustworthy data sources to evaluate applicants?

- What alerts do we receive when candidate behavior or platform outcomes deviate from the norm?

- Do we have visibility across platforms to recognize patterns that may signal fraud?

These vulnerabilities can creep in quietly, especially when teams rely heavily on automation without understanding how these systems make decisions. A model may surface high-scoring candidates without context, missing signals that a profile is artificially optimized or fully synthetic.
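To make the question about deviation alerts concrete, here is a rough sketch of one such alert: flagging a traffic source whose latest daily application count is far outside its own history. It assumes you can export daily counts per source; the source names, the function name, and the `cutoff` value are illustrative assumptions. The score is the modified z-score based on the median absolute deviation, which is less distorted by earlier spikes than a mean and standard deviation.

```python
from statistics import median


def flag_unusual_sources(
    daily_counts: dict[str, list[int]], cutoff: float = 3.5
) -> list[str]:
    """Flag sources whose latest daily count deviates sharply from their history."""
    flagged = []
    for source, counts in daily_counts.items():
        history, latest = counts[:-1], counts[-1]
        if len(history) < 5:
            continue  # too little history to judge this source
        med = median(history)
        # Median absolute deviation; fall back to 1.0 for a perfectly flat history
        # so the division below never hits zero.
        mad = median(abs(c - med) for c in history) or 1.0
        score = 0.6745 * (latest - med) / mad
        if abs(score) > cutoff:
            flagged.append(source)
    return flagged


if __name__ == "__main__":
    counts = {
        "job_board_a": [40, 42, 38, 41, 39, 40, 43],   # hypothetical source
        "job_board_b": [20, 22, 19, 21, 20, 23, 180],  # hypothetical source
    }
    print(flag_unusual_sources(counts))
```

A spike can also come from a successful campaign, so an alert like this is best routed to a person who can check the source before any applications are rejected.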

This is why auditing your hiring tech stack is so important. Addressing these gaps requires more transparency, better tooling, and team-wide awareness of where weaknesses exist. A small investment in process audits today can prevent a high-impact failure tomorrow.

How Does Recruitics Detect Synthetic Applicants and Improve Funnel Quality?

Recruitics is more than a fraud detection tool. As a recruitment marketing platform, it helps organizations attract better talent, reduce wasted spending, and gain full visibility into performance—all while introducing smart safeguards that improve quality.

One way Recruitics supports fraud prevention is by embedding positive friction into the hiring funnel. These are fast, lightweight checks designed to verify authenticity without slowing down legitimate candidates.

Examples include:

- Location-based validation to ensure alignment with job requirements

- ID prompts or credential confirmation during application flows

- Monitoring traffic quality by source to flag unusual activity patterns

These features are part of Recruitics' broader platform capabilities:

- Precision targeting: Programmatic job advertising that reaches high-intent candidates and filters out low-quality traffic

- Funnel analytics: Real-time insights that surface anomalies in application behavior

- ApplyAnywhere™: A seamless application experience that includes built-in, subtle verification steps

- Human + machine coordination: Automation supports scale, while hiring teams investigate flagged patterns

This isn’t about creating roadblocks. It’s about eliminating noise, reducing risk, and allowing recruiters to focus on what matters most: handling qualified, verified applications with care.

While Recruitics focuses primarily on the top of the funnel, recruiters can partner with IT and security teams to extend these protections further downstream. This helps to close gaps and maintain trust throughout the hiring journey.